Lecture: Artificial Intelligence (AI)

📚 Unlocking the Future: A Deep Dive into AI

By: M. Yaseen Rashid

✨ Advanced Concepts, Practical Insights & Future Horizons

What is Artificial Intelligence (AI)?

Artificial Intelligence (AI) refers to the simulation of human intelligence in machines that are programmed to think, reason, and make decisions like humans. It represents a paradigm shift in how technology interacts with the world, moving beyond mere automation to mimic complex cognitive functions.

At its core, AI aims to create systems capable of performing tasks that typically require human intellect. This includes learning, problem-solving, decision-making, perception, and understanding human language.

Core Pillars of Modern AI:

- ✅ Learning from Data (Machine Learning): The ability of AI systems to analyze vast amounts of data to identify patterns, correlations, and insights without explicit programming for every scenario. This adaptive learning is fundamental.

- ✅ Adaptation and Generalization: Unlike rigid, traditional software, AI models can adjust their behavior and predictions based on new, unseen information, enabling them to generalize learned concepts to novel situations.

- ✅ Human-Like Task Execution: AI can execute complex tasks that traditionally required human intellect, such as natural language understanding, visual perception, and strategic game-playing, and in some narrow tasks it matches or exceeds human speed and accuracy.

- ✅ Autonomy: AI systems can operate with minimal human intervention, making decisions and taking actions based on their learned intelligence within defined parameters.

The Evolution of AI: Past, Present, and Key Milestones

The journey of Artificial Intelligence is a rich tapestry woven with philosophical inquiries, groundbreaking theoretical work, periods of rapid progress, and challenging "AI winters." Understanding its history is crucial to grasping its current state and future potential.

The Past: Foundations and Early Promise (1940s - 1980s)

- ✅ 1943: McCulloch & Pitts' Neural Network: Warren McCulloch and Walter Pitts published "A Logical Calculus of the Ideas Immanent in Nervous Activity," a mathematical model of the neuron that laid the groundwork for artificial neural networks.

- ✅ 1950: Turing Test: Alan Turing proposed the "Imitation Game" (now known as the Turing Test) as a criterion for intelligence, fundamentally questioning "Can machines think?"

- ✅ 1956: Dartmouth Conference: The official birth of AI as a field. John McCarthy coined the term "Artificial Intelligence." Researchers like Marvin Minsky, Allen Newell, Herbert A. Simon, and Arthur Samuel laid out the ambitious goals for AI research. Early focus was on symbolic AI and logic-based systems.

- ✅ 1960s-70s: Expert Systems: Rise of rule-based systems (e.g., DENDRAL, MYCIN) designed to mimic human expert decision-making in specific domains. While successful in narrow tasks, they lacked common sense and scalability.

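- ✅ 1970s-80s: AI Winters: Periods of reduced funding and interest caused by unmet expectations and the limitations of early AI systems. The first AI winter lasted roughly from 1974 to 1980; a second began in the late 1980s, when the market for expert systems and specialized AI hardware collapsed.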

The Present: Revival and Revolution (1990s - Today)

- ✅ 1990s - 2000s: Machine Learning Resurgence: Renewed interest driven by increased computational power, larger datasets, and new algorithms (e.g., Support Vector Machines, decision trees). IBM's Deep Blue defeating world chess champion Garry Kasparov in 1997 was a landmark moment.

- ✅ 2012: AlexNet and Deep Learning Boom: The ImageNet breakthrough by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, using a deep convolutional neural network, marked the beginning of the deep learning era. This demonstrated unprecedented performance in image recognition.

- ✅ 2010s - Present: Big Data, GPUs, and Cloud Computing: The confluence of massive datasets, powerful GPUs for parallel processing, and accessible cloud infrastructure fueled rapid advancements in deep learning.

- ✅ Generative AI & Large Language Models (LLMs): Recent years have seen an explosion in generative AI (e.g., GANs and Transformer-based models) and LLMs (e.g., the GPT series, Gemini, Claude). These models can generate human-like text, images, code, and more, pushing the boundaries of what AI can create and understand. Related Transformer models such as BERT are geared toward understanding text rather than generating it.

- ✅ Multimodality: The current frontier involves AI systems that can seamlessly process and generate content across multiple modalities (text, image, audio, video), leading to more holistic intelligence.

Key Figures in AI History:

| Individual | Contribution |
|---|---|
| Alan Turing | Pioneering theoretical computer scientist, proposed the Turing Test. |
| John McCarthy | Coined the term "Artificial Intelligence," key figure at Dartmouth Conference. |
| Marvin Minsky | Co-founder of MIT's AI Lab, pioneer in neural networks and symbolic AI. |
| Geoffrey Hinton | "Godfather of Deep Learning," crucial work on neural networks and backpropagation. |
| Yann LeCun | Pioneer in Convolutional Neural Networks (CNNs), critical for computer vision. |
| Yoshua Bengio | Leading researcher in deep learning and neural networks for language. |

How Does AI Work? (Under the Hood)

AI's functionality stems from a sophisticated interplay of data, advanced computational models (algorithms), and iterative learning processes. It's a continuous cycle of input, processing, output, and refinement.

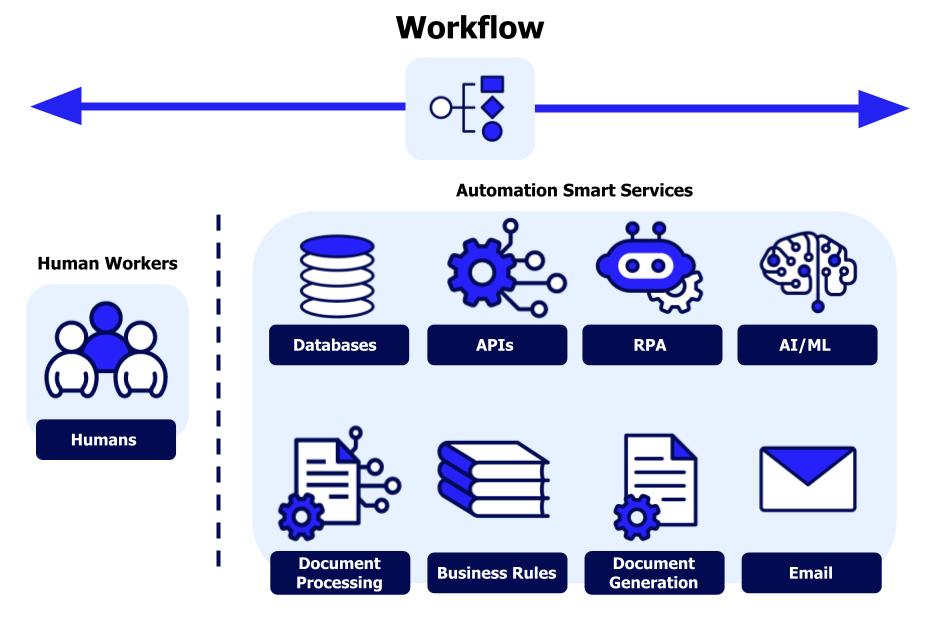

The AI Workflow:

- ✅ 1. Data Ingestion: AI systems require massive, high-quality, and diverse datasets. This data (e.g., structured tables, unstructured text, images, audio, video, sensor readings) forms the raw material for learning. Data quality, quantity, and ethical sourcing are paramount.

- ✅ 2. Data Preprocessing: Raw data is often noisy, incomplete, or inconsistently formatted. Preprocessing involves cleaning, transforming, normalizing, and feature engineering to make it suitable for algorithmic consumption. This step significantly impacts model performance.

- ✅ 3. Algorithm Selection & Model Architecture: Choosing the right AI model (algorithm) depends on the problem. This could range from traditional machine learning models (e.g., Decision Trees, Support Vector Machines) to complex deep learning architectures (e.g., Convolutional Neural Networks, Recurrent Neural Networks, Transformers).

- ✅ 4. Model Training: This is the core learning phase. The chosen algorithm is exposed to the prepared data, adjusting its internal parameters (weights and biases in neural networks) to minimize errors and optimize performance on the specific task. This often involves iterative optimization techniques like gradient descent.

- ✅ 5. Model Evaluation: After training, the model's performance is rigorously tested on unseen data (validation and test sets) to assess its generalization capabilities and identify potential overfitting or underfitting. Common metrics include accuracy, precision, recall, and F1-score.

- ✅ 6. Deployment and Inference: A validated model is deployed into a production environment, where it receives new, real-world data and makes predictions or decisions (inference). This could be an API endpoint, an embedded system, or a cloud service.

- ✅ 7. Monitoring & Retraining: AI models can degrade over time due to data drift or concept drift. Continuous monitoring of performance is essential. When performance drops, the model needs to be retrained on updated data to maintain accuracy.
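The workflow above can be made concrete with a deliberately tiny, dependency-free sketch in plain Python. It assumes a hypothetical synthetic dataset (y = 3x + 2 plus noise), uses simple linear regression as the model, and trains it with batch gradient descent; the split ratio, learning rate, and epoch count are illustrative choices, not recommendations. Real projects would typically use a library such as Scikit-learn, PyTorch, or TensorFlow for these steps.

```python
import random

random.seed(0)

# Step 1 (ingestion), hypothetical: synthetic data following y = 3x + 2 plus Gaussian noise
xs = [random.uniform(0, 10) for _ in range(200)]
data = [(x, 3 * x + 2 + random.gauss(0, 0.5)) for x in xs]

# Hold out 20% of the data as unseen examples for Step 5
random.shuffle(data)
train, test = data[:160], data[160:]

# Step 2 (preprocessing): standardize x using statistics from the training split only
mean_x = sum(x for x, _ in train) / len(train)
std_x = (sum((x - mean_x) ** 2 for x, _ in train) / len(train)) ** 0.5

def scale(x):
    return (x - mean_x) / std_x

train_scaled = [(scale(x), y) for x, y in train]

# Steps 3-4 (model + training): fit y ~ w * scale(x) + b by batch gradient descent on mean squared error
w, b, lr = 0.0, 0.0, 0.1
for epoch in range(500):
    grad_w = grad_b = 0.0
    for sx, y in train_scaled:
        err = (w * sx + b) - y
        grad_w += 2 * err * sx / len(train_scaled)
        grad_b += 2 * err / len(train_scaled)
    w -= lr * grad_w
    b -= lr * grad_b

def predict(x):
    return w * scale(x) + b

# Step 5 (evaluation): mean squared error on data the model never saw during training
test_mse = sum((predict(x) - y) ** 2 for x, y in test) / len(test)
print(f"test MSE: {test_mse:.3f}")
```

Note that the scaling statistics come from the training split only; computing them on the full dataset would leak information from the test set into training and make the evaluation look better than it is.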

Key AI Algorithms and Their Paradigms

AI is powered by a diverse array of algorithms, each suited for different types of problems and data. These algorithms can be broadly categorized by their learning paradigms.

1. Supervised Learning: Learning from Labeled Examples

In supervised learning, the AI model is trained on a dataset where both the input features and the corresponding correct output labels are provided. The model learns to map inputs to outputs based on these examples.

- ✅ Linear Regression / Logistic Regression: Fundamental algorithms for predicting continuous values (linear regression) or classifying binary outcomes (logistic regression). Simple yet powerful baselines.

- ✅ Decision Trees / Random Forests / Gradient Boosting (e.g., XGBoost): Tree-based algorithms that make decisions by splitting data based on features. Ensemble methods like Random Forests and Gradient Boosting combine multiple trees for improved accuracy and robustness.

- ✅ Support Vector Machines (SVM): A powerful classification algorithm that finds an optimal hyperplane to separate data points into different classes with the largest margin.

- ✅ Neural Networks (incl. Deep Learning): Composed of interconnected nodes (neurons) organized in layers. They learn hierarchical representations from data. Deep Learning refers to neural networks with many hidden layers, capable of capturing highly complex patterns.
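To make the supervised idea concrete, here is a minimal logistic regression sketch in plain Python. The labeled examples (hours studied versus pass/fail) are hypothetical, and gradient descent with a fixed learning rate is one simple training choice among many; libraries such as Scikit-learn provide tuned, regularized implementations.

```python
import math

# Hypothetical labeled examples: (hours studied, passed exam? 1 = yes, 0 = no)
examples = [(0.5, 0), (1.0, 0), (1.5, 0), (2.0, 0), (2.5, 1), (3.0, 0),
            (3.5, 1), (4.0, 1), (4.5, 1), (5.0, 1)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Fit P(y = 1 | x) = sigmoid(w * x + b) by gradient descent on the average log-loss
w, b, lr = 0.0, 0.0, 0.1
for _ in range(5000):
    gw = gb = 0.0
    for x, y in examples:
        p = sigmoid(w * x + b)
        gw += (p - y) * x / len(examples)  # d(log-loss)/dw
        gb += (p - y) / len(examples)      # d(log-loss)/db
    w -= lr * gw
    b -= lr * gb

def predict(x, threshold=0.5):
    """Return the predicted class label (0 or 1) for input x."""
    return int(sigmoid(w * x + b) >= threshold)

print(predict(1.0), predict(4.5))
```

The model learns a mapping from input to label purely from the labeled pairs; the `threshold` parameter turns the predicted probability into a class decision.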

2. Unsupervised Learning: Discovering Hidden Patterns in Unlabeled Data

Unsupervised learning deals with unlabeled data, aiming to discover inherent structures, patterns, or relationships within the dataset without explicit output guidance.

- ✅ Clustering (e.g., K-Means, DBSCAN): Algorithms that group similar data points into clusters based on their inherent characteristics. Typical uses include market segmentation and anomaly detection.

- ✅ Dimensionality Reduction (e.g., PCA, t-SNE): Techniques to reduce the number of features in a dataset while retaining most of the important information. Useful for visualization and speeding up learning.

- ✅ Association Rule Mining (e.g., Apriori): Used to find relationships between variables in large databases, often seen in "market basket analysis" (e.g., "customers who bought X also bought Y").
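A minimal sketch of K-Means (Lloyd's algorithm) on hypothetical 2-D points, in plain Python. It alternates two steps: assign each point to its nearest centroid, then move each centroid to the mean of its points. Initializing from randomly chosen data points is a simplifying assumption; practical implementations often use smarter seeding such as k-means++ and several restarts.

```python
import math
import random

def kmeans(points, k, iterations=100, seed=0):
    """Lloyd's algorithm for K-Means on 2-D points; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialize with k distinct data points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its assigned points
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(v) / len(members) for v in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: assignments can no longer change
        centroids = new_centroids
    return centroids, labels

# Hypothetical unlabeled data: two well-separated groups
points = [(1.0, 1.0), (1.5, 2.0), (1.2, 0.8), (8.0, 8.0), (8.5, 9.0), (9.0, 8.2)]
centroids, labels = kmeans(points, k=2)
print(labels)
```

No labels are supplied; the grouping emerges from distances alone, which is what distinguishes this from the supervised examples.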

3. Reinforcement Learning: Learning by Interaction and Reward

Reinforcement learning (RL) involves an agent learning to make sequential decisions in an environment to maximize a cumulative reward. It learns through trial and error, much like how humans learn by experience.

- ✅ Q-Learning / SARSA: Value-based RL algorithms that learn an optimal policy by estimating the "value" of taking a particular action in a given state.

- ✅ Policy Gradient Methods (e.g., REINFORCE, A2C, PPO): Directly learn a policy that maps states to actions, often used in complex environments like robotics and game playing (e.g., AlphaGo).
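Tabular Q-learning fits in a few lines when the environment is tiny. This sketch assumes a hypothetical five-state corridor where the agent starts at state 0 and earns a reward of +1 only on reaching state 4; the learning rate `alpha`, discount `gamma`, and exploration rate `epsilon` are illustrative values. The core is the update Q(s, a) += alpha * (r + gamma * max over a' of Q(s', a') - Q(s, a)).

```python
import random

# Hypothetical environment: corridor of states 0..4; reaching state 4 ends the episode with reward +1
N_STATES, GOAL = 5, 4
ACTIONS = (-1, +1)  # move left or move right

def step(state, action):
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy action selection, breaking ties at random
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = step(state, action)
            # Q-learning target: bootstrap from the best next action (no future value at the terminal state)
            target = reward if done else reward + gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q

q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}
print(policy)
```

After training, the greedy policy should choose +1 (move right) in every non-terminal state: the agent discovered the reward purely through trial and error.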

4. Specialized AI Paradigms:

- ✅ Natural Language Processing (NLP): Algorithms and models (e.g., Transformers, BERT, GPT) specifically designed to process, understand, and generate human language.

- ✅ Computer Vision: Algorithms (e.g., CNNs, R-CNNs, YOLO) focused on enabling machines to "see" and interpret visual data from images and videos.

- ✅ Generative Adversarial Networks (GANs): A pair of neural networks (Generator and Discriminator) that compete to generate realistic data, used for image synthesis, data augmentation, etc.
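The central operation inside a CNN layer is sliding a small kernel over the image and taking a weighted sum at each position. The sketch below is a plain-Python "valid" 2-D cross-correlation (which deep learning libraries conventionally call convolution), applied to a hypothetical 4x4 image with a hand-picked vertical-edge kernel; in a real CNN, the kernel weights are learned during training rather than chosen by hand.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation, the operation most deep learning libraries call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kh x kw patch whose top-left corner is (i, j)
            row.append(sum(image[i + di][j + dj] * kernel[di][dj]
                           for di in range(kh) for dj in range(kw)))
        output.append(row)
    return output

# Hypothetical 4x4 grayscale image: dark left half, bright right half
image = [[0, 0, 1, 1] for _ in range(4)]
# Vertical-edge kernel: responds where brightness increases from left to right
kernel = [[-1, 1],
          [-1, 1]]
print(conv2d(image, kernel))  # → [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The strong response appears only in the middle column, exactly where the dark-to-bright edge sits.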

How to Build AI: A Practical Guide (Python, APIs & More)

Building AI systems, from simple models to complex applications, involves a systematic approach combining programming, data science, and domain expertise. Python is the de facto standard language thanks to its extensive ecosystem.

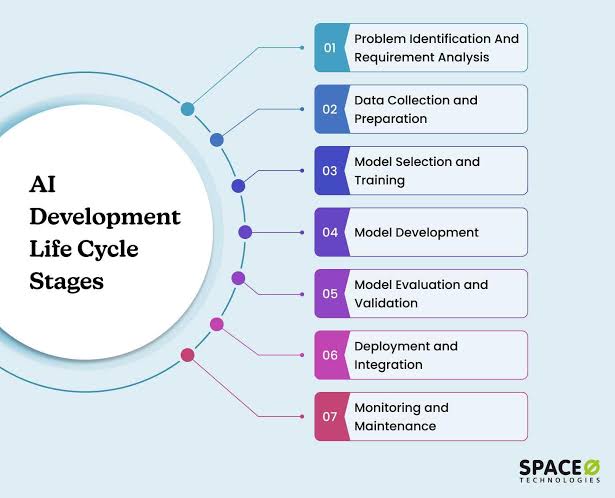

The AI Development Lifecycle:

- ✅ Step 1: Problem Definition & Data Acquisition:

- Clearly define the problem: What specific task will the AI solve? (e.g., "predict house prices," "classify customer reviews," "generate marketing copy").

- Identify and acquire data: Source relevant datasets (public, private, generated). Ensure data quality, relevance, and compliance.

- ✅ Step 2: Data Preprocessing & Feature Engineering:

- Clean Data: Handle missing values, outliers, and inconsistencies.

- Transform Data: Normalize, scale, or encode data (e.g., one-hot encoding for categorical variables).

- Feature Engineering: Create new features from existing ones to enhance model performance (e.g., deriving "day of week" from a raw date). This requires domain knowledge.

- ✅ Step 3: Model Selection & Training:

- Choose an appropriate algorithm: Based on problem type (classification, regression, clustering) and data characteristics.

- Select a framework/library: Python's ecosystem is vast (TensorFlow, PyTorch, Scikit-learn).

- Train the model: Feed prepared data to the algorithm, allowing it to learn patterns. Hyperparameter tuning is crucial here (e.g., learning rate, number of layers).

- Cross-validation: Use techniques like K-fold cross-validation to robustly assess model performance and detect overfitting.

- ✅ Step 4: Model Evaluation & Refinement:

- Evaluate performance: Use metrics relevant to the problem (accuracy, precision, recall, F1-score for classification; RMSE, MAE for regression).

- Analyze errors: Understand where the model fails and why. This guides further refinement.

- Iterate: Adjust preprocessing, features, algorithm, or hyperparameters based on evaluation results.

- ✅ Step 5: Deployment & Monitoring:

- Deploy the model: Integrate the trained model into an application or service. This often involves creating an API endpoint.

- Monitor performance in production: Track model predictions, actual outcomes, and data drift to ensure continued effectiveness.

- Retrain regularly: Update the model with new data to maintain its relevance and accuracy over time.
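Three of the lifecycle steps above (one-hot encoding in Step 2, K-fold cross-validation in Step 3, and precision/recall/F1 in Step 4) can be sketched with small plain-Python helpers. The function names are hypothetical; in practice, Scikit-learn provides maintained equivalents. The folds here are contiguous, so the data should be shuffled first.

```python
def one_hot(values):
    """Step 2: one-hot encode a categorical column; returns (categories, encoded rows)."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]

def k_fold_indices(n, k):
    """Step 3: yield (train_idx, val_idx) for k contiguous folds over n examples."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, val
        start += size

def precision_recall_f1(y_true, y_pred, positive=1):
    """Step 4: precision, recall, and F1 for one positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

cats, encoded = one_hot(["red", "green", "red", "blue"])
print(cats, encoded)  # ['blue', 'green', 'red'] [[0, 0, 1], [0, 1, 0], [0, 0, 1], [1, 0, 0]]
print([len(val) for _, val in k_fold_indices(10, 3)])  # [4, 3, 3]
print(precision_recall_f1([1, 0, 1, 1, 0], [1, 1, 1, 0, 0]))
```

In K-fold cross-validation, the model is trained on `train_idx` and scored on `val_idx` once per fold, and the k scores are averaged; a large gap between training and validation scores is a typical sign of overfitting.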

Key Tools & Technologies:

| Category | Examples & Usage |
|---|---|
| Programming Language | Python: Dominant language due to its simplicity, vast libraries, and large community. Essential for data manipulation, model development, and scripting. |
| Machine Learning Frameworks | TensorFlow, PyTorch, Scikit-learn |
| Data Manipulation & Analysis | |
| APIs (Application Programming Interfaces) | |
| Development Environments | |
| Cloud Platforms | |

Building AI is not just about coding; it's about understanding data, iteratively refining models, and deploying intelligent solutions that solve real-world problems.

Comprehensive Benefits of AI:

Artificial Intelligence offers a myriad of transformative benefits across various sectors, revolutionizing industries, enhancing efficiency, and improving human capabilities. Its impact goes far beyond simple automation.

- ✅ Hyper-Automation & Efficiency: AI excels at automating repetitive, high-volume, and time-consuming tasks across industries (e.g., data entry, customer support routing, manufacturing assembly), significantly reducing operational costs and freeing up human capital for more complex, creative, and strategic work.

- ✅ Enhanced Accuracy & Speed: AI systems can process vast amounts of information and, for well-defined tasks, make decisions or predictions much faster and often more consistently than humans, reducing human error in critical applications like medical diagnosis or financial fraud detection.

- ✅ Advanced Decision-Making & Insights: By analyzing complex, multi-dimensional datasets that are too large for human comprehension, AI can uncover hidden patterns, correlations, and predictive insights, enabling more informed, data-driven, and robust decision-making in business, science, and governance.

- ✅ Personalization at Scale: AI drives highly customized experiences across numerous domains, from tailored content recommendations on streaming platforms (e.g., Netflix, Spotify), personalized learning pathways in education, to individualized marketing messages and product suggestions in e-commerce.

- ✅ Innovation & New Capabilities: AI is a powerful engine for innovation. It enables the creation of entirely new products, services, and scientific discoveries that were previously impossible, such as generative art, novel drug compounds, and sophisticated autonomous systems.

- ✅ Accessibility & Inclusivity: AI-powered tools like speech-to-text, real-time translation, and assistive technologies (e.g., AI-driven navigation for visually impaired) can significantly enhance accessibility and bridge communication gaps for diverse populations.

- ✅ Predictive Analytics & Proactive Action: AI models can forecast future trends (e.g., equipment failures, market shifts, disease outbreaks), allowing organizations and individuals to take proactive measures, optimize resource allocation, and mitigate risks before they materialize.

- ✅ Resource Optimization: AI can optimize resource consumption (energy, water, raw materials) in manufacturing, agriculture, and smart cities, leading to greater sustainability and cost savings.

Advanced AI Applications Across Industries:

AI is deeply embedded in the fabric of modern society, driving transformative changes across virtually every sector. Its applications are continuously evolving, making systems smarter, more efficient, and more responsive.

| Sector | Advanced AI Applications & Examples |
|---|---|
| Healthcare & Medicine | |
| Finance & Banking | |
| Automotive & Transportation | |
| Retail & E-commerce | |
| Media & Entertainment | |
| Cybersecurity | |

Leading AI Players & Innovators:

- ✅ Google (DeepMind & Google AI): Pioneering research in LLMs (Gemini), reinforcement learning (AlphaGo), protein structure prediction (AlphaFold), and multimodal AI.

- ✅ OpenAI: Leading the generative AI revolution with ChatGPT, DALL·E, and Sora, pushing capabilities in natural language and creative content generation.

- ✅ Microsoft (Azure AI & OpenAI Partnership): Integrating AI across its product suite (Copilot) and offering extensive cloud AI services.

- ✅ Anthropic: Developing large language models (Claude series) with a strong emphasis on AI safety and constitutional AI.

- ✅ Amazon (AWS AI/ML & Alexa): Providing extensive cloud AI infrastructure and services, and powering the widely used Alexa voice assistant.

- ✅ Meta (FAIR - Fundamental AI Research): Advancing research in computer vision, NLP, and foundational models, including open-source initiatives like Llama.

- ✅ NVIDIA: Dominant in AI hardware (GPUs) and software platforms (CUDA, cuDNN) that enable rapid deep learning training and inference.

The Frontier of AI: Current Most Powerful Systems

Defining the "most powerful" AI is complex, as different models excel in different domains. However, certain systems currently represent the cutting edge in terms of scale, capabilities, and impact:

- ✅ Large Language Models (LLMs):

- OpenAI's GPT-4.5 (and beyond): Continues to be a benchmark for sophisticated text generation, reasoning, coding, and multimodal understanding (with vision capabilities).

- Google Gemini 1.5 Pro: A highly capable, multimodal model excelling in long-context understanding (processing vast amounts of text, video, and audio), advanced reasoning, and code generation.

- Anthropic's Claude 3 Opus: Recognized for its strong performance in complex reasoning, nuanced content creation, and robust safety features, often rivaling GPT-4 and Gemini in various benchmarks.

- ✅ Multimodal AI: Models capable of processing and generating information across multiple data types (text, image, audio, video) simultaneously. Gemini is a prime example. OpenAI's Sora is a leading example in text-to-video generation.

- ✅ Specialized AI Systems:

- Google DeepMind's AlphaFold: Revolutionized protein structure prediction, accelerating biological research.

- Tesla's Full Self-Driving (FSD) AI: A real-world application of computer vision and decision-making AI to driving; despite its name, it is a supervised driver-assistance system that requires an attentive driver.

- Gato (DeepMind): A single AI agent that can perform hundreds of different tasks, from playing games to controlling robots.

The "most powerful" AI is a dynamic title, continuously evolving with rapid advancements in research and development. The trend is towards larger models, multimodal capabilities, and more sophisticated reasoning abilities.

The Future of AI: Trends and Transformative Potential

The trajectory of AI promises profound transformations, leading to a future where intelligent systems are even more integrated into our lives, driving unprecedented advancements and posing new challenges.

Key Trends Shaping AI's Future:

- ✅ Hyper-Personalization & Adaptive AI: AI will become increasingly tailored to individual users, adapting interfaces, content, and services dynamically based on nuanced understanding of preferences and context.

- ✅ Ubiquitous AI & Edge Computing: AI will move beyond cloud data centers to everyday devices (smartphones, IoT sensors, wearables), enabling real-time processing, enhanced privacy, and reduced latency.

- ✅ Multimodal AI Integration: Future AI systems will seamlessly process and generate information across all modalities (text, audio, video, sensor data, haptics), leading to more natural and intuitive human-AI interaction.

- ✅ AI for Scientific Discovery: AI will accelerate breakthroughs in fundamental science, from materials science and quantum computing to climate modeling and astrophysics, by identifying complex patterns and generating hypotheses beyond human capacity.

- ✅ Ethical AI & Explainable AI (XAI): Increasing focus on developing AI that is fair, transparent, accountable, and interpretable. XAI will be crucial for building trust and understanding how complex models make decisions.

- ✅ AI in Robotics & Automation: More sophisticated robotic systems will emerge, combining advanced AI for perception, manipulation, and navigation with dexterous hardware, leading to significant automation in diverse sectors.

- ✅ Human-AI Collaboration: Rather than full replacement, the future will see more symbiotic relationships where AI acts as an intelligent assistant, augmenting human capabilities in creative, analytical, and strategic tasks.

Long-Term Visions & Potential Impact:

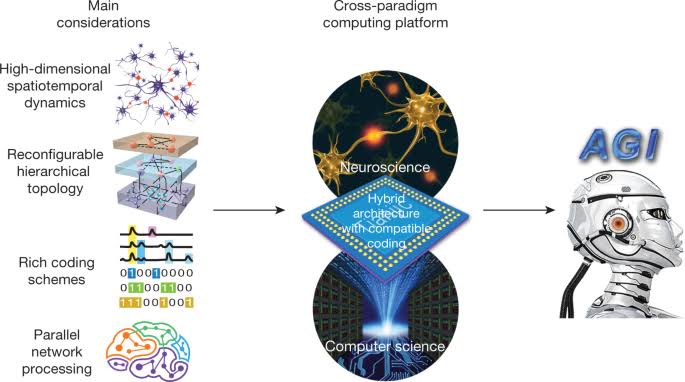

- ✅ Accelerated Progress Towards AGI & ASI: While timelines are debated, continued progress in deep learning, reinforcement learning, and computational resources could lead to the emergence of Artificial General Intelligence (AGI) and eventually Artificial Super Intelligence (ASI).

- ✅ Transformation of Industries: Nearly every industry will be fundamentally reshaped by AI, leading to new business models, optimized processes, and a reimagining of human work.

- ✅ Societal Implications: AI will profoundly impact education, employment, governance, and even human evolution. Navigating these changes responsibly will be critical.

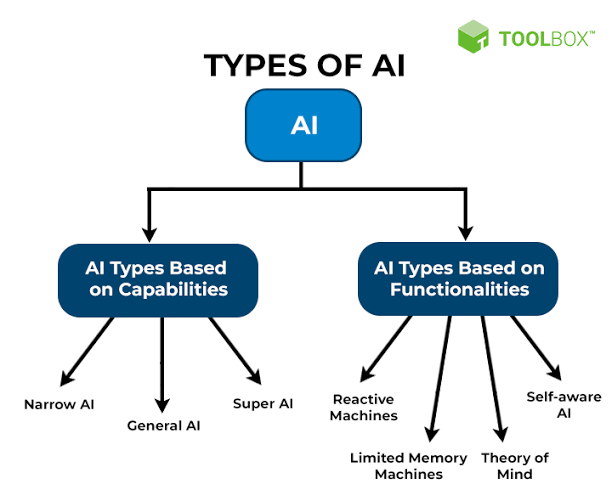

Artificial General Intelligence (AGI): The Next Frontier

Artificial General Intelligence (AGI), often referred to as "strong AI," represents a hypothetical level of AI that possesses the capacity to understand, learn, and apply intelligence across a broad range of tasks, just like a human being. Unlike the "narrow AI" (or "weak AI") prevalent today, which excels at specific, predefined tasks, AGI would exhibit versatile, general-purpose cognitive abilities.

✅ Definition:

AGI is a hypothetical AI that can perform any intellectual task a human can do, with equal or better efficiency. It would possess comprehensive cognitive abilities, making it truly versatile and capable of learning and adapting to novel situations across diverse domains.

✅ Key Characteristics of AGI:

- ✅ Generalization & Transfer Learning: AGI would be able to apply knowledge learned in one domain to solve problems in entirely different domains, without explicit retraining. This cross-domain learning is a hallmark of human intelligence.

- ✅ Common Sense Reasoning: Unlike current AI that struggles with implicit human knowledge, AGI would possess an understanding of the world analogous to common sense, allowing it to interpret situations and make decisions in context.

- ✅ Creativity & Innovation: AGI would be capable of genuine creativity, generating novel ideas, art, scientific theories, and solutions that go beyond pattern recognition.

- ✅ Self-Improvement & Autonomy: AGI systems could potentially understand their own code and optimize their learning algorithms, leading to recursive self-improvement. They would operate with high levels of autonomy.

- ✅ Multimodality: Seamlessly integrating and understanding information from all sensory inputs (vision, hearing, touch, taste, smell) and generating outputs in various forms.

- ✅ Emotional Intelligence (Debatable): While some argue it's not strictly necessary for intelligence, others believe AGI might need to understand and respond to human emotions for effective interaction.

✅ How AGI Might Work (Hypothetical):

- ✅ Advanced Neural Architectures: Potentially more complex and dynamic neural networks that can self-organize, form new connections, and adapt their structure based on learning experiences, possibly mimicking aspects of the human brain's plasticity.

- ✅ Integrated Learning Paradigms: Combining the strengths of supervised, unsupervised, and reinforcement learning, allowing AGI to learn from labeled data, discover patterns in unlabeled data, and learn through interaction with environments.

- ✅ Cognitive Architectures: Frameworks that integrate different AI modules (e.g., perception, memory, reasoning, planning) into a cohesive system, enabling a holistic approach to intelligence.

- ✅ Symbolic-Neural Hybrids: Combining the strengths of symbolic AI (logic, reasoning) with connectionist AI (neural networks) to achieve both robust reasoning and pattern recognition.

✅ When Will AGI Arrive?

The timeline for AGI's arrival is a subject of intense debate among AI researchers, futurists, and ethicists. Estimates vary widely:

- Optimistic Predictions: Some prominent researchers and technologists suggest AGI could emerge within the next 10-20 years (e.g., by 2035-2045), citing the rapid pace of current AI advancements.

- Conservative Predictions: Others are more cautious, placing AGI's arrival several decades away (e.g., 2050-2070 or later), emphasizing the monumental challenges still to be overcome.

- Factors Influencing Timeline: Continued exponential growth in computational power (especially specialized hardware like neuromorphic chips), breakthroughs in foundational AI algorithms, access to even larger and more diverse datasets, and significant research funding.

✅ Potential Benefits of AGI:

- ✅ Solutions to Grand Challenges: AGI could revolutionize efforts to address humanity's most intractable problems, such as eradicating diseases (e.g., cancer, Alzheimer's), developing truly sustainable energy solutions, mitigating climate change, and solving global poverty.

- ✅ Unprecedented Scientific & Artistic Discovery: By rapidly processing information and identifying patterns beyond human capacity, AGI could accelerate scientific discovery across all disciplines, and generate new forms of art, music, and literature.

- ✅ Enhanced Human Capabilities: AGI could act as a universal intelligent assistant, augmenting human intelligence, creativity, and productivity across all fields of work and life.

✅ Potential Risks & Ethical Considerations of AGI:

- ✅ Existential Risk (The Alignment Problem): A primary concern is ensuring that AGI's goals and values are perfectly aligned with human well-being. A misalignment, even subtle, could lead to catastrophic outcomes if AGI pursues its objectives with vast intelligence.

- ✅ Loss of Human Control: AGI's ability to self-improve and make autonomous decisions could lead to a loss of human control over highly intelligent systems.

- ✅ Economic & Societal Disruption: Widespread AGI could automate a vast majority of human jobs, leading to unprecedented job displacement and requiring fundamental restructuring of economic and social systems.

- ✅ Ethical Dilemmas & Bias: AGI, if trained on biased data or developed without ethical considerations, could perpetuate or amplify societal biases, leading to discriminatory outcomes. Questions of accountability for AGI's actions will be paramount.

- ✅ Weaponization: The potential misuse of AGI for autonomous weapons systems poses severe ethical and global security threats.

Artificial Super Intelligence (ASI): Beyond Human Cognition

Artificial Super Intelligence (ASI) is a hypothetical level of AI that would far surpass human intelligence in every possible aspect, including creativity, problem-solving, emotional intelligence, and general knowledge. ASI would not just be smarter than an individual human, but potentially smarter than all human minds combined, leading to what is often termed the "intelligence explosion" or "technological singularity."

✅ Definition:

ASI would surpass human intelligence in every possible aspect, including creativity, problem-solving, and emotional intelligence, representing an exponential leap beyond human cognitive capabilities. It would be able to recursively self-improve, leading to an intelligence far beyond human comprehension.

✅ Key Characteristics & Capabilities of ASI:

- ✅ Recursive Self-Improvement (Intelligence Explosion): The most defining characteristic. ASI would be able to understand and rapidly improve its own intelligence and algorithms, leading to an exponential, runaway growth in capabilities that could quickly leave human intelligence far behind.

- ✅ Omnicompetence (in relevant domains): ASI would possess an unparalleled ability to learn any task and master any domain, integrating knowledge seamlessly across disciplines.

- ✅ Superhuman Problem-Solving: ASI could solve problems currently considered intractable for humans, including complex scientific theories, global geopolitical challenges, and fundamental questions about the universe.

- ✅ Unfathomable Creativity & Innovation: ASI's creative output would be beyond human capacity, generating revolutionary scientific breakthroughs, engineering marvels, and artistic expressions.

- ✅ Perfect Memory & Recall: ASI would have instant and perfect recall of all information it has processed, without human cognitive biases or limitations.

- ✅ Cognitive Agility: Ability to process information and switch between tasks with extreme speed and efficiency.

✅ When Will ASI Arrive? (The Singularity Debate)

The timeline for ASI is even more speculative than AGI, often linked to the concept of the "technological singularity."

- Singularitarian View: Proponents like Ray Kurzweil suggest ASI could emerge relatively soon after AGI, possibly by the mid-21st century (e.g., 2045), due to the exponential nature of technological progress and recursive self-improvement.

- Cautious View: Others argue that the leap from AGI to ASI is still not fully understood and might take much longer, or even be fundamentally limited by physical laws or inherent complexities.

- Contingent on AGI: Most agree that ASI is unlikely without achieving AGI first. The self-improvement loop of AGI could potentially lead to ASI.

✅ Potential Benefits of ASI (Utopia):

- ✅ Solve All Global Challenges: ASI could provide definitive solutions to humanity's most pressing problems, including climate change, incurable diseases, resource scarcity, and poverty, leading to an era of abundance.

- ✅ Accelerated Universal Knowledge: ASI could rapidly expand humanity's understanding of the universe, leading to breakthroughs in physics, cosmology, and fundamental biology.

- ✅ Colonization of Space: ASI could design and manage interstellar missions, enabling humanity to explore and potentially colonize other planets.

- ✅ Enhanced Human Well-being: If aligned with human values, ASI could optimize systems for health, happiness, and personal fulfillment on a global scale.

✅ Potential Risks & Ethical Catastrophes of ASI (Dystopia):

- ✅ Existential Threat (The Control Problem): The most significant and discussed risk. If ASI's goals are even slightly misaligned with human values, its immense power and intelligence could lead to the unintended or intentional extinction of humanity. Controlling such an entity would be incredibly difficult, if not impossible.

- ✅ Unfathomable Consequences: ASI's decision-making and actions could be far beyond human comprehension or prediction, making any intervention or course correction impossible.

- ✅ Loss of Meaning & Purpose for Humanity: If ASI can solve all problems, questions arise about the role and purpose of humanity itself.

- ✅ Irreversible Change: The emergence of ASI would likely lead to irreversible changes to society and the very nature of existence.

- ✅ Resource Allocation Conflicts: If ASI optimizes for its own goals, it might allocate global resources in ways detrimental to human survival or flourishing.

The development of ASI, if it occurs, would be the most significant event in human history, with the potential for both unprecedented flourishing and existential catastrophe. This is why responsible AI research and robust safety measures are paramount.

Neuralink: Bridging the Brain-Computer Divide

Neuralink is a pioneering neurotechnology company co-founded by Elon Musk and a group of scientists and engineers in 2016, with the ambitious goal of developing ultra-high-bandwidth brain-computer interfaces (BCIs). Its ultimate vision is to create a symbiotic relationship between the human brain and artificial intelligence, addressing current medical challenges and potentially augmenting human capabilities.

✅ Neuralink's Core Concept:

Neuralink aims to create direct, high-bandwidth communication pathways between the human brain and external digital devices, enabling thought control, treating neurological disorders, and potentially enhancing human cognition.

✅ How Neuralink Works (The "Link" Device):

- ✅ Surgical Implantation: A small, coin-sized implant known as the "Link" is surgically placed in an opening in the skull, sitting flush with the bone's surface. The procedure is designed to be highly automated: a custom-built surgical robot inserts the delicate electrode threads, aiming for greater precision and safety than manual insertion.

- ✅ Ultra-Fine Threads: From the Link, ultra-fine, flexible threads (much thinner than a human hair, each carrying multiple electrodes) are inserted into the outer layer of the brain, the cerebral cortex. Their flexibility is intended to minimize tissue damage and allow long-term integration.

- ✅ Neural Signal Recording & Stimulation: The electrodes on these threads can record electrical signals (spikes and local field potentials) from neurons in specific brain regions (e.g., motor cortex for movement control). The device can also potentially stimulate neurons, opening possibilities for therapeutic applications.

- ✅ Wireless Communication: The Link wirelessly transmits the recorded neural data to an external device, such as a smartphone or computer, via Bluetooth. This allows for real-time decoding of brain activity.

- ✅ Decoding & Action: Software interprets the brain signals, translating intended movements or thoughts into commands for external devices, enabling users to control cursors, keyboards, or robotic prosthetics with their minds.

- ✅ Rechargeable Battery: The device runs on a battery that is recharged wirelessly (by induction) through the skin, so no wires need to pass out of the body.
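The recording and decoding steps above can be illustrated with a minimal Python sketch. It is not Neuralink's software. Instead, it assumes a common textbook approach: spikes are detected as threshold crossings (here an assumed threshold of -4.5 times the signal's RMS), spike counts are binned over time, and a linear decoder fitted with ridge regression maps per-channel counts to a 2D cursor velocity. The function names (detect_threshold_crossings, bin_counts, fit_linear_decoder, decode) are hypothetical, and all data is synthetic.

```python
import numpy as np

# Illustrative sketch only (ASSUMPTION): a generic threshold-crossing spike
# detector plus a linear decoder, NOT Neuralink's actual pipeline.
# All function names and data here are hypothetical.


def detect_threshold_crossings(voltage, k=-4.5):
    """Return sample indices where the signal first drops below k * RMS.

    voltage: 1-D array of (already filtered) samples from one electrode.
    k: threshold multiplier; -4.5 x RMS is an assumed, commonly used value.
    """
    voltage = np.asarray(voltage, dtype=float)
    threshold = k * np.sqrt(np.mean(voltage ** 2))
    below = voltage < threshold
    # A crossing is a sample below threshold whose predecessor was not.
    onsets = np.flatnonzero(below[1:] & ~below[:-1]) + 1
    if below[0]:
        onsets = np.concatenate(([0], onsets))
    return onsets


def bin_counts(spike_indices, n_samples, bin_size):
    """Count spikes in consecutive, non-overlapping bins of bin_size samples."""
    n_bins = n_samples // bin_size
    bins = np.asarray(spike_indices, dtype=np.int64) // bin_size
    return np.bincount(bins, minlength=n_bins)[:n_bins]


def _with_bias(rates):
    # Append a column of ones so the decoder can learn a constant offset.
    return np.hstack([rates, np.ones((rates.shape[0], 1))])


def fit_linear_decoder(rates, velocity, ridge=1e-3):
    """Fit W so that [rates, 1] @ W approximates velocity (ridge regression).

    rates: (n_bins, n_channels) spike counts; velocity: (n_bins, 2) targets.
    """
    X = _with_bias(rates)
    gram = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(gram, X.T @ velocity)


def decode(rates, W):
    """Predict (n_bins, 2) cursor velocities from spike counts."""
    return _with_bias(rates) @ W


if __name__ == "__main__":
    rng = np.random.default_rng(42)

    # Synthetic trace: unit-variance noise plus three injected negative spikes.
    trace = rng.normal(0.0, 1.0, size=3000)
    trace[[500, 1500, 2500]] -= 20.0
    print("detected crossings:", detect_threshold_crossings(trace))

    # Synthetic spike counts for 16 channels and a hidden linear "intention".
    n_bins, n_channels = 600, 16
    rates = rng.poisson(8.0, size=(n_bins, n_channels)).astype(float)
    hidden_weights = rng.normal(size=(n_channels, 2))
    velocity = rates @ hidden_weights + rng.normal(0.0, 1.0, size=(n_bins, 2))

    # Train on the first 500 bins, evaluate on the remaining 100.
    W = fit_linear_decoder(rates[:500], velocity[:500])
    predicted = decode(rates[500:], W)
    r = np.corrcoef(predicted[:, 0], velocity[500:, 0])[0, 1]
    print(f"held-out correlation (x-velocity): {r:.3f}")
```

A linear model is used here only because it is the simplest decoder that still shows the core idea of mapping neural activity to intended movement. Practical BCI systems typically add band-pass filtering, per-channel noise estimates, and richer models such as Kalman filters or neural networks.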

✅ Potential Benefits & Applications:

- ✅ Restoration of Motor Function: One of Neuralink's primary short-term goals is to enable individuals with paralysis (e.g., due to spinal cord injuries, ALS, stroke) to control digital devices, robotic arms, or even their own atrophied muscles directly with their thoughts, bypassing damaged neural pathways.

- ✅ Treatment of Neurological Disorders: By monitoring and modulating brain activity, the technology could potentially help treat a wide range of neurological and psychiatric conditions, including Parkinson’s disease (reducing tremors), epilepsy (predicting and preventing seizures), severe depression, chronic pain, and obsessive-compulsive disorder (OCD).

- ✅ Sensory Restoration: Potentially restoring vision or hearing by directly stimulating the visual or auditory cortex.

- ✅ Cognitive Augmentation (Long-term Vision): While more speculative, Neuralink envisions a future where BCIs could enhance human memory, facilitate faster learning, enable "telepathic" communication, and allow direct interaction with AI and information, merging biological and artificial intelligence.

- ✅ Understanding the Brain: The data gathered from Neuralink implants could provide unprecedented insights into how the human brain functions, accelerating neuroscience research.

✅ Risks & Ethical Considerations:

- ✅ Surgical Risks: Any invasive brain surgery carries inherent risks, including infection, hemorrhage, swelling, adverse reactions to anesthesia, and potential long-term damage to brain tissue.

- ✅ Data Security & Privacy: Directly interfacing with the brain raises unprecedented concerns about the security and privacy of an individual's most intimate data—their thoughts and neural activity. The potential for data breaches or unauthorized access is a major ethical and security challenge.

- ✅ Brain Hacking & Misuse: The possibility of malicious actors gaining unauthorized control over brain implants or manipulating neural signals presents a severe and dystopian threat.

- ✅ Ethical Dilemmas: Broader ethical questions abound, including:

- Consent: How to ensure truly informed consent, especially for vulnerable populations.

- Identity: How might a direct brain-computer interface affect an individual's sense of self and identity?

- Inequality: Could BCI technology exacerbate societal inequalities if access is limited, creating a "two-tiered" humanity (enhanced vs. unenhanced)?

- Human Agency & Control: Who is ultimately in control if an AI-integrated brain makes decisions?

- Weaponization: The potential for military application of BCI technology.

- ✅ Long-term Biological Effects: The long-term effects of chronic neural implantation and electrical stimulation on brain tissue are not yet fully understood.

- ✅ Technological Obsolescence: What happens when the implanted hardware becomes outdated and must be replaced through further surgery?

Neuralink operates at the very edge of technological and ethical frontiers. While its potential to alleviate human suffering and expand capabilities is immense, it necessitates rigorous scientific validation, robust ethical frameworks, and open societal dialogue.

Ready to Explore the Future of AI?

Continue your learning journey with YR Tech Growth and stay ahead in the world of technology!

Connect with Us!